On - [4th February, 2025] - TechCrunch’s senior reporter Natasha Lomas wrote this article about LogicStar.

The text of the article is quoted below: “ Swiss startup LogicStar is bent on joining the AI agent game. The summer 2024-founded startup has bagged $3 million in pre-seed funding to bring tools to the developer market that can do autonomous maintenance of software applications, rather than the more typical AI agent use-case of code co-development.

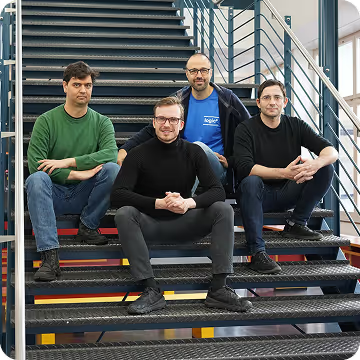

LogicStar CEO and co-founder Boris Paskalev (pictured top right, in the feature image, with his fellow co-founders) suggests the startup’s AI agents could end up partnering with code development agents - such as, say, the likes of Cognition Labs’ Devin - in a business win-win.

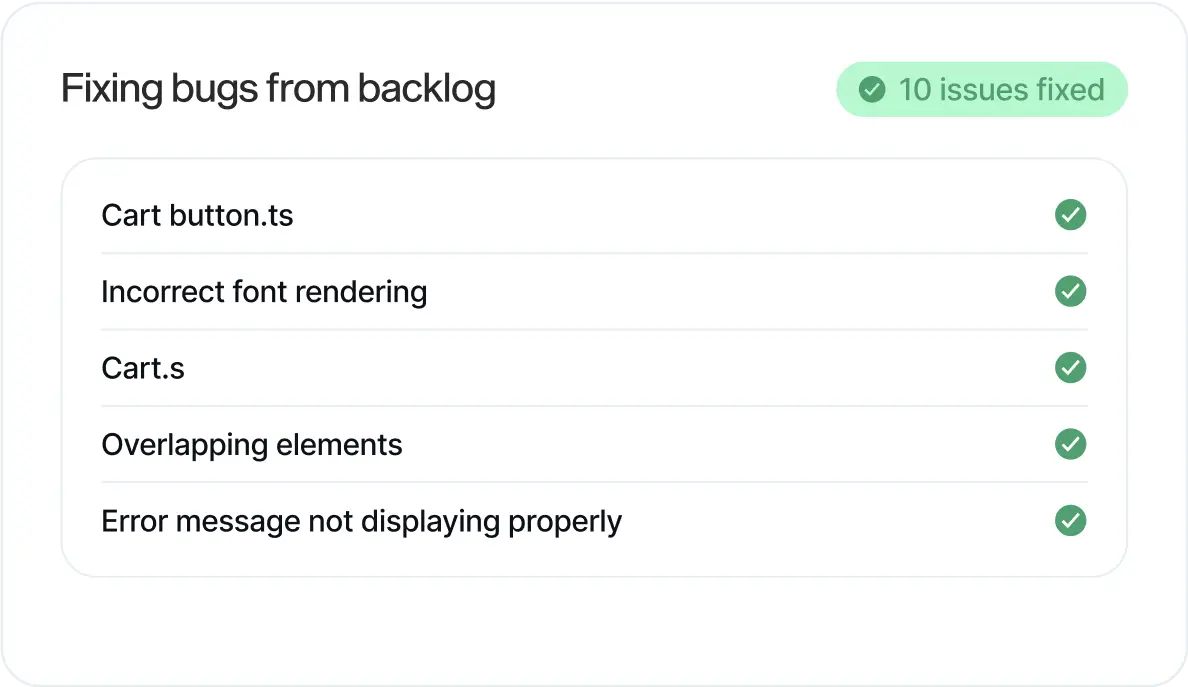

Code fidelity is an issue for AI agents building and deploying software, just as it is for human developers, and LogicStar wants to do its bit to grease the development wheel by automatically picking up and fixing bugs wherever they may crop up in deployed code.

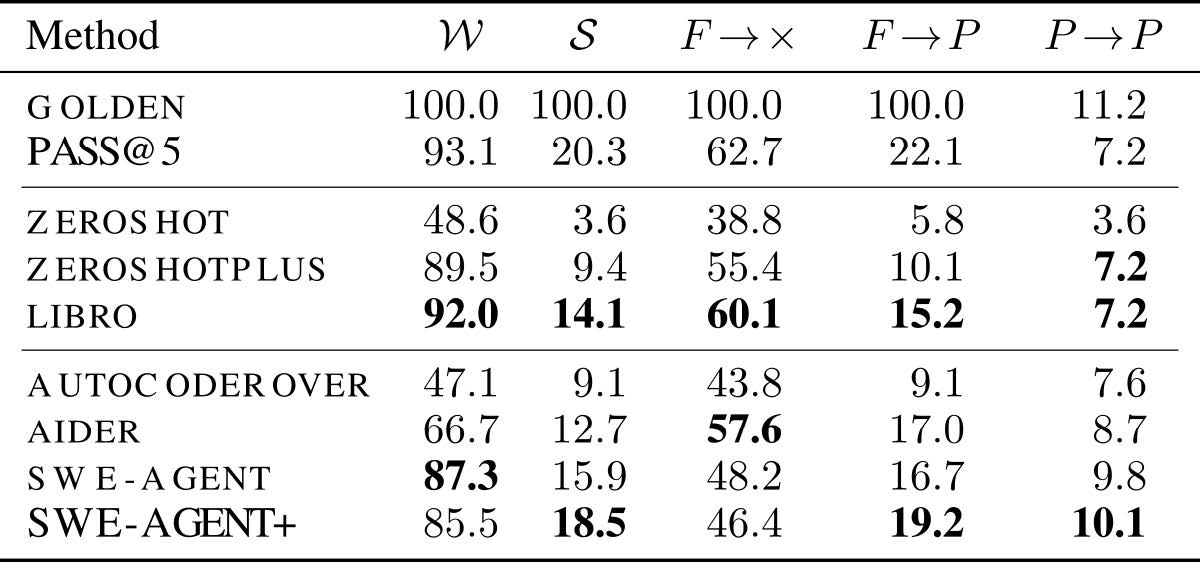

As it stands, Paskalev suggests that “even the best models and agents” out there are unable to resolve the majority of bugs they’re presented with - hence the team spying an opportunity for an AI startup that’s dedicated to improving these odds and delivering on the dream of less tedious app maintenance.

To this end, they are building atop large language models (LLMs) - such as OpenAI’s GPT or even China’s DeepSeek - taking a model-agnostic approach for their platform. This allows LogicStar to dip into different LLMs and maximize its AI agents’ utility, based on which foundational model works best for resolving a particular code issue.

Paskalev contends that the founding team has the technical and domain-specific knowledge to build a platform that can resolve programming problems which can challenge or outfox LLMs working alone. They also have past entrepreneurial success to point to: he sold his prior code review startup, DeepCode, to cybersecurity giant Snyk back in September 2020.

“In the beginning we were thinking about actually building a large language model for code,” he told TechCrunch. “Then we realized that that will quickly become a commodity… Now we’re building assuming all those large language models are there. Assuming there’s some actually decent [AI] agents for code, how do we extract the maximum business value from them?”

He said that the idea built on the team’s understanding of how to analyze software applications. “Combine that with large language models - then focus into grounding and verifying what those large language models and the AI agent actually suggest.”

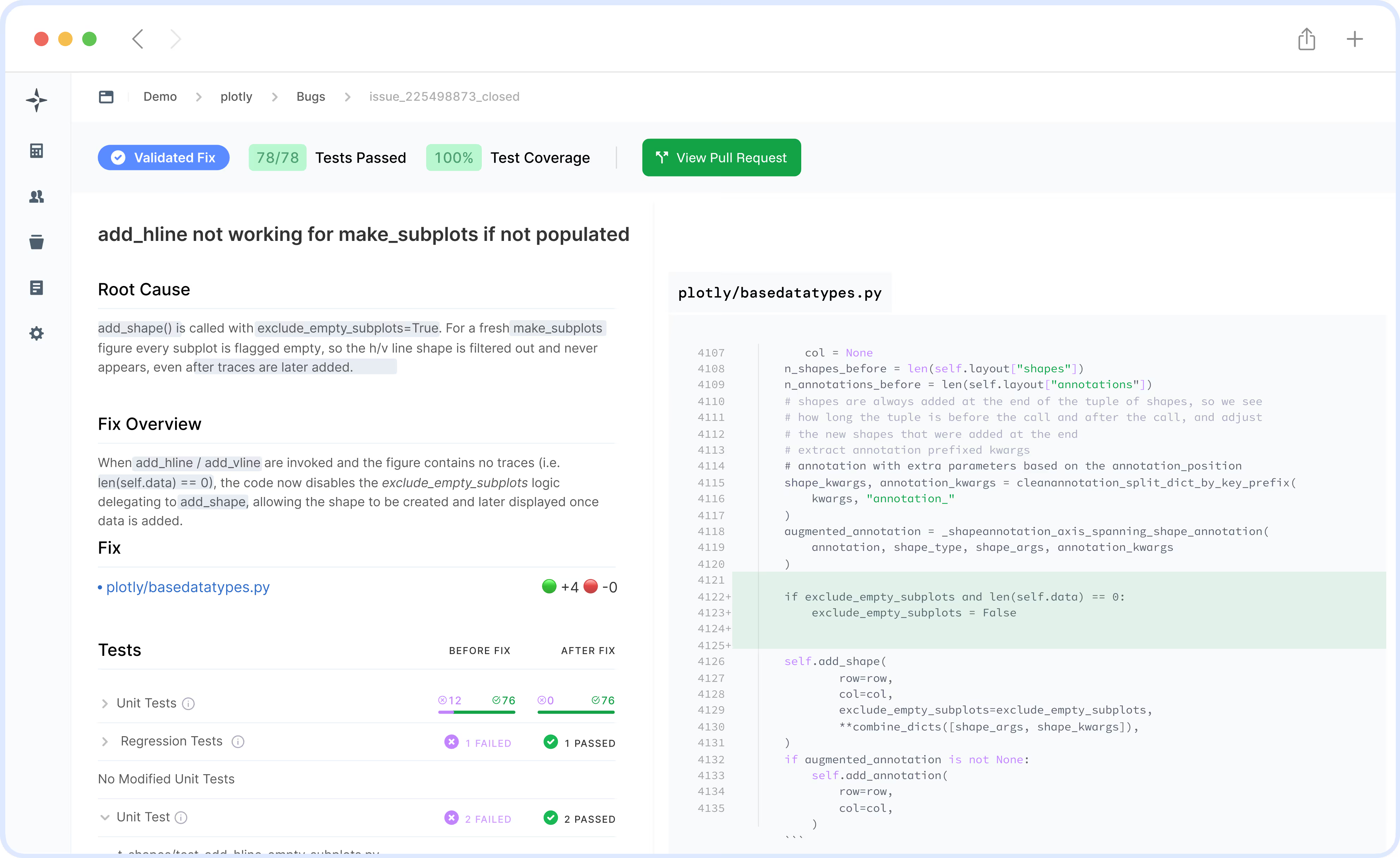

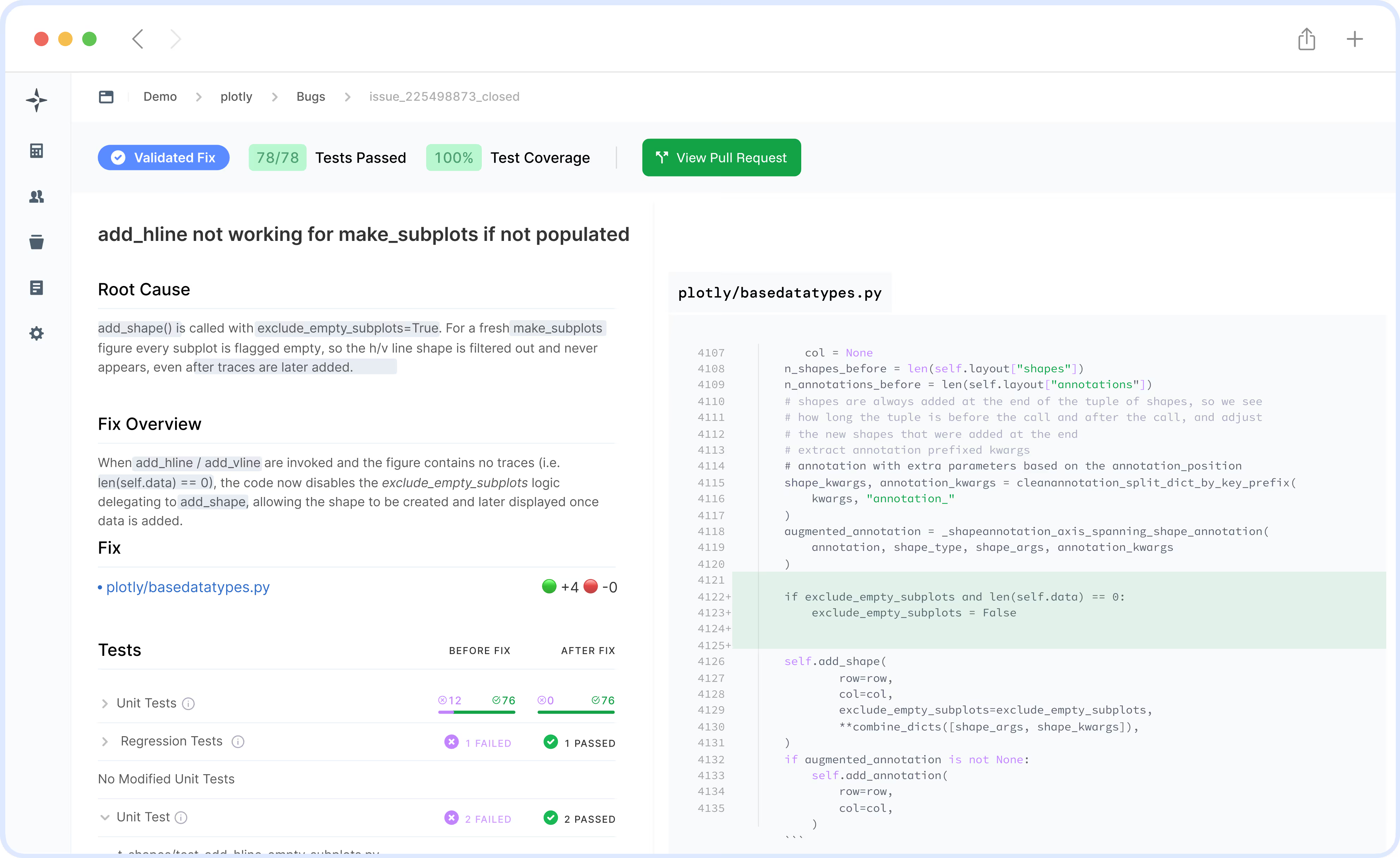

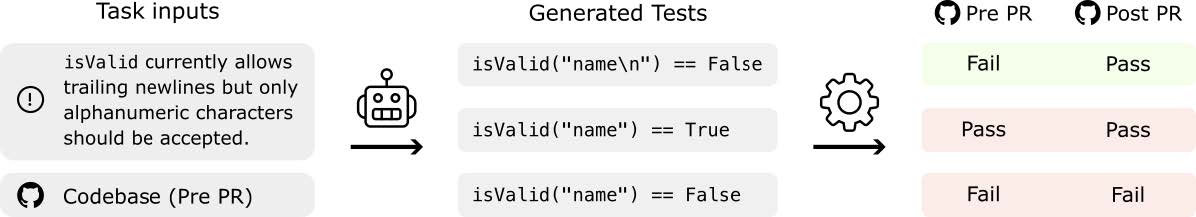

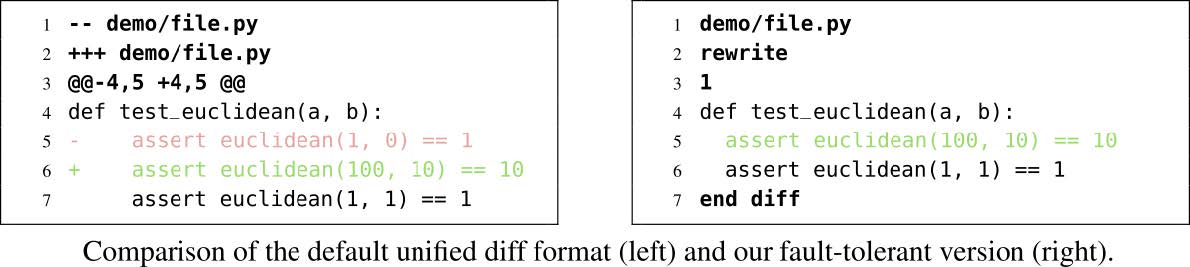

Test-driven development What does that mean in practice? Paskalev says LogicStar performs an analysis of each application that its tech is deployed on - using “classical computer science methods” - in order to build a “knowledge base”. This gives its AI agent a comprehensive map of the software’s inputs and outputs; how variables link to functions; and any other linkages and dependencies etc.

Then, for every bug it’s presented with, the AI agent is able to determine which parts of the application are impacted - allowing LogicStar to narrow down the functions needing to be simulated in order to test scores of potential fixes.

Per Paskalev, this “minimized execution environment” allows the AI agent to run “thousands” of tests aimed at reproducing bugs to identify a “failing test”, and - through this “test-driven development” approach - ultimately land on a fix that sticks.

He confirms that the actual bug fixes are sourced from the LLMs. But because LogicStar’s platform enables this “very fast executive environment” its AI agents can work at scale to separate the wheat from the chaff, as it were, and serve its users with a shortcut to the best that LLMs can offer.

“What we see is [LLMs are] great for prototyping, testing things, etc, but it’s absolutely not great for [code] production, commercial applications. I think we’re far from there, and this is what our platform delivers,” he argued. “To be able to extract those capabilities of the models today, we can actually safely extract commercial value and actually save time for developers to really focus on the important stuff.”

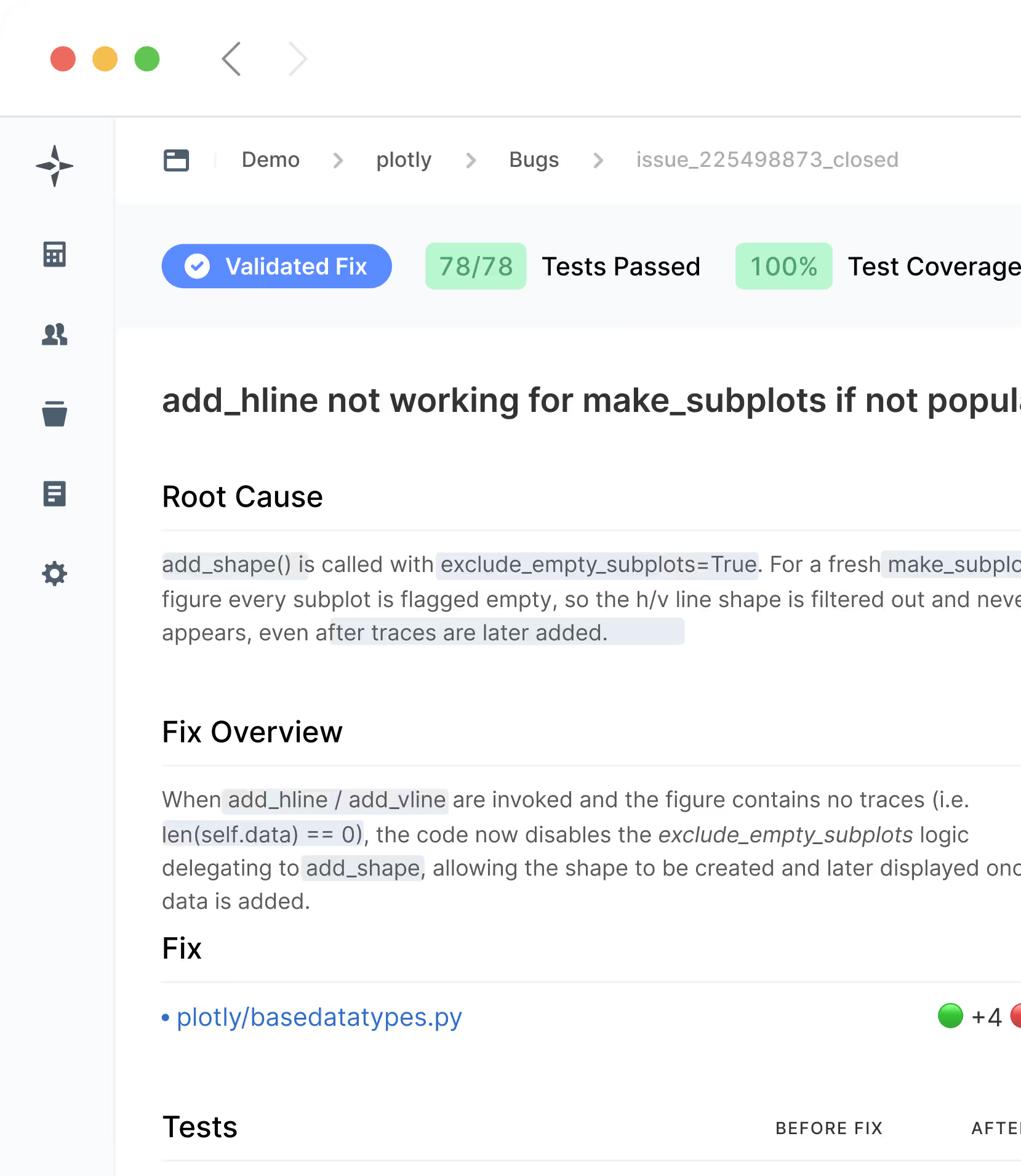

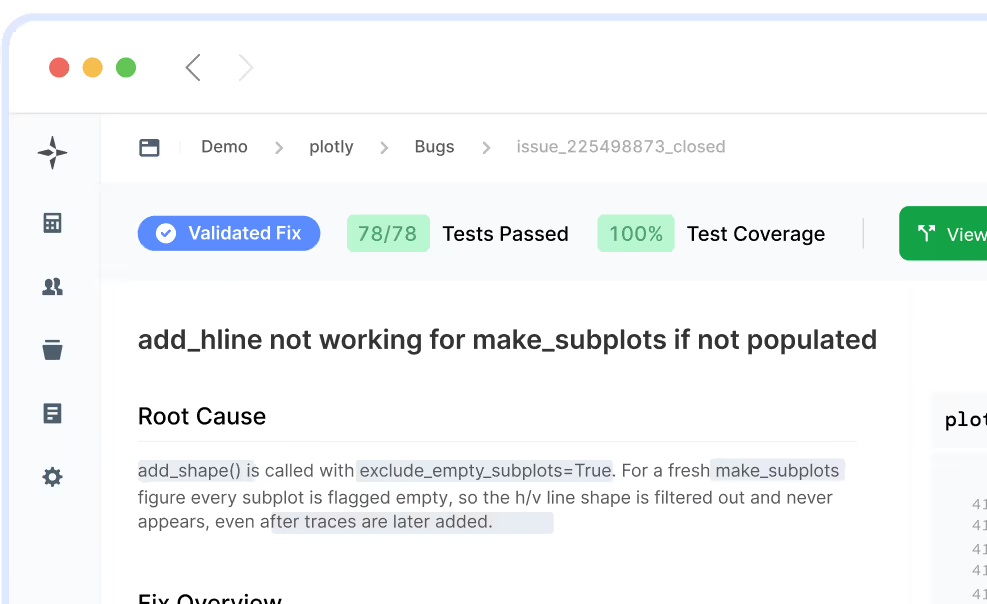

Enterprises are set to be LogicStar’s initial target. Its “silicon agents” are intended to be put to work alongside corporate dev teams, albeit at a fraction of the salary required to hire a human developer, handling a range of app upkeep tasks and freeing up engineering talent for more creative and/or challenging work. (Or, well, at least until LLMs and AI agents get a lot more capable.)

While the startup’s pitch touts a “fully autonomous” app maintenance capability, Paskalev confirms that the platform will allow human developers to review (and otherwise oversee) the fixes its AI agents call up. So trust can be - and must be - earned first.

“The accuracy that a human developer delivers ranges between 80 to 90%. Our goal [for our AI agents] is to be exactly there,” he adds.

It’s still early days for LogicStar: an alpha version of its technology is in testing with a number of undisclosed companies which Paskalev refers to as “design partners”. Currently the tech only supports Python - but expansions to Typescript, Javascript and Java are billed as “coming soon”.

“The main goal [with the pre-seed funding] is to actually show the technology works with our design partners - focusing on Python,” adds Paskalev. “We already spent a year on it, and we have lots of opportunity to actually expand. And that’s why we’re trying to focus it first, to show the value in one case.”

The startup’s pre-seed raise was led by European VC firm Northzone, with angel investors from DeepMind, Fleet, Sequoia scouts, Snyk and Spotify also joining the round.

In a statement, Michiel Kotting, partner at Northzone, said: “AI-driven code generation is still in its early stages, but the productivity gains we’re already seeing are revolutionary. The potential for this technology to streamline development processes, reduce costs, and accelerate innovation is immense. and the team’s vast technical expertise and proven track record position them to deliver real, impactful results. The future of software development is being reshaped, and LogicStar will play a crucial role in software maintenance.”

LogicStar is operating a waiting list for potential customers wanting to express interest in getting early access. It told us a beta release is planned for later this year. “