Closing the Agentic Coding Loop with Self-Healing Software

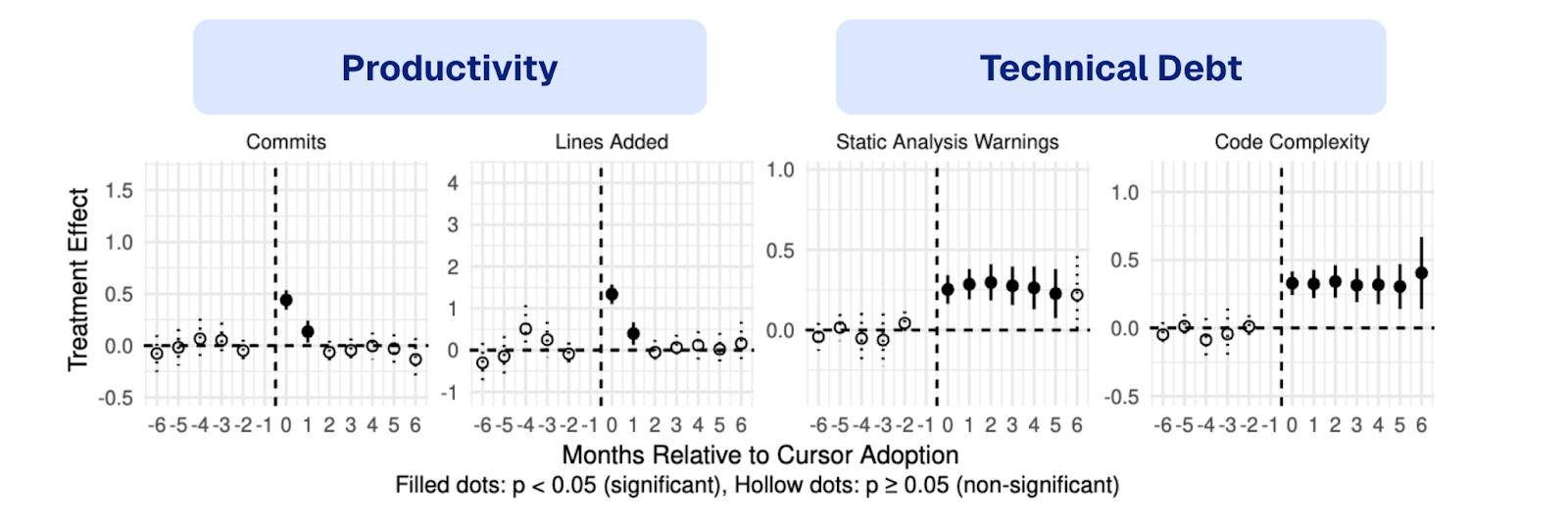

Over the past year, agentic coding tools like Cursor, Claude Code, and Codex have been adopted at remarkable speed. They already account for roughly 20% of public GitHub PRs [1], and teams using them report productivity gains of up to 50% [2] in the early phases of adoption. But as review workloads spike and larger, more complex changes land faster than teams can absorb them, code quality begins to slip, and the long-term benefits become far less clear.

In this post, we examine why today’s AI-assisted development workflows hit a wall and how Self-Healing Software can break through it.

[2] The AI Productivity Paradox Report

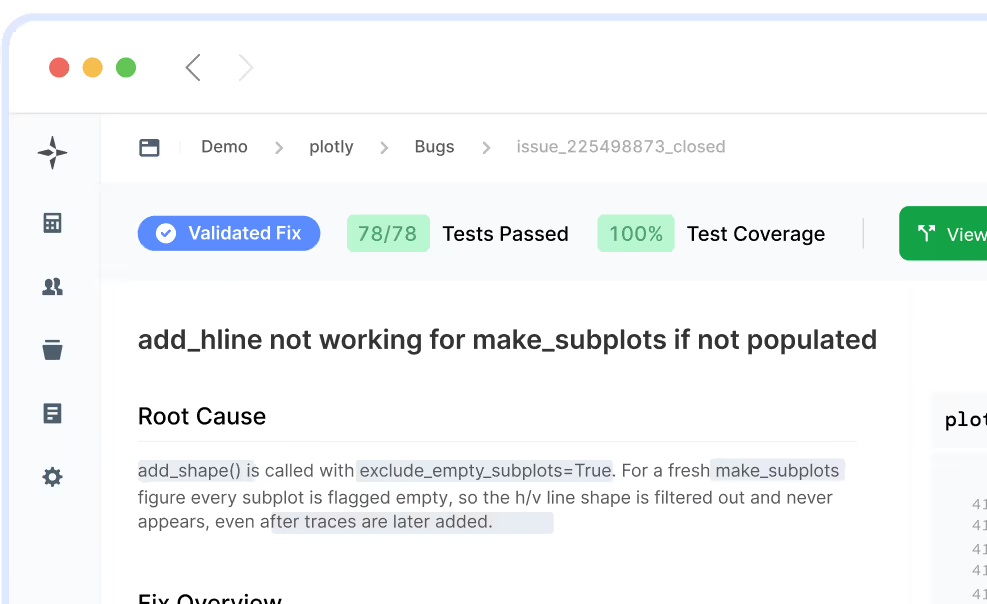

A recent CMU study [3] analyzing over 800 GitHub repositories that adopted Cursor identified a consistent pattern:

The takeaway is clear: when software can be produced faster than it can be reviewed, tested, and consolidated, quality becomes the limiting factor.

[3] He, Hao, et al. "Speed at the Cost of Quality? The Impact of LLM Agent Assistance on Software Development." arXiv 2025

Agentic coding tools don’t just help developers write code faster; they encourage writing more new code.

Analyzing all public PRs on GitHub over the last 6 months, we find that AI-generated PRs tend to add significantly more lines than human-authored ones [1]. This is not just because LLMs generate verbose solutions. It reflects a deeper architectural problem:

In parallel, human reviewers now face larger, more complex PRs. Review quality drops, subtle bugs slip through, and duplicated patterns proliferate. The result is predictable: a burst of short-term acceleration followed by a plateau, or even a slowdown, as technical debt accumulates, the codebase becomes harder to navigate, and the context needed for each change becomes harder to gather [3].

To achieve sustained acceleration, it isn’t enough for AI to write new features faster. We need AI that also maintains the ever-growing, ever-more-complex codebase.

This means building systems that can automatically:

In other words, software must be able to self-heal. Development velocity then does not just spike briefly before grinding to a halt; it grows sustainably, because issues are resolved automatically while features continue to be added.

At LogicStar, we build exactly this missing piece: a platform for self-healing applications.

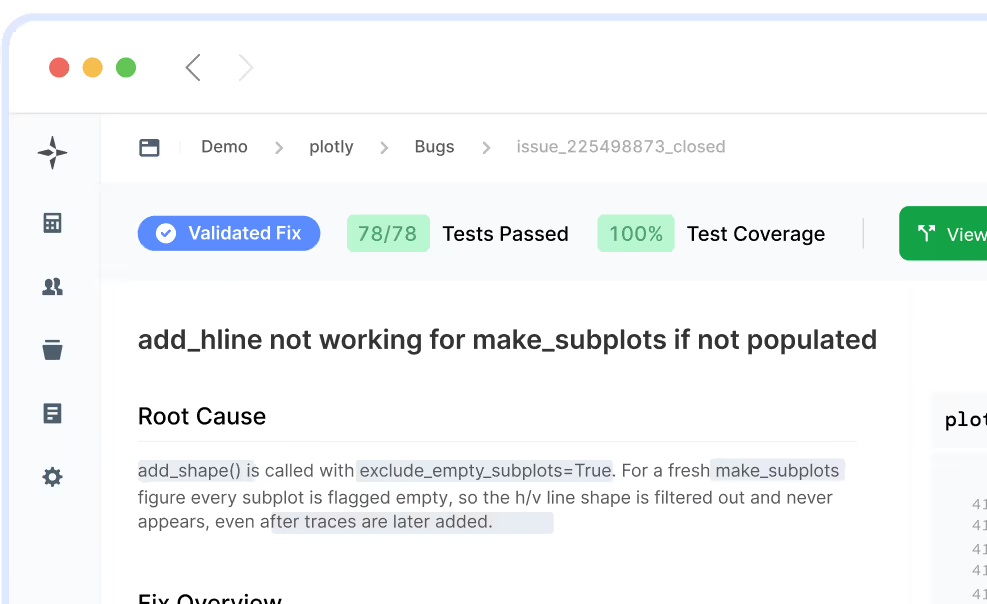

Our platform continuously analyzes applications, identifies real issues, generates candidate fixes, and verifies them using rigorous programmatic reasoning. This enables applications to become increasingly resilient, even as AI agents generate more of the underlying code.
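To make the generate-then-verify idea concrete, here is a minimal sketch of a validation gate. It is not LogicStar's implementation: the toy bug, the candidate functions, and the helper names `passes_checks` and `first_validated` are all hypothetical, and plain input/output checks stand in for the far more rigorous verification described above. The point is only that a candidate fix is accepted when every check passes and discarded otherwise.

```python
from typing import Callable, Iterable, Optional, Sequence

# Hypothetical type for illustration: a "fix" is a replacement implementation.
Fix = Callable[[Sequence[float]], float]


def buggy_mean(xs: Sequence[float]) -> float:
    # Original code: crashes with ZeroDivisionError on empty input.
    return sum(xs) / len(xs)


def candidate_a(xs: Sequence[float]) -> float:
    # Silences the crash but breaks every non-empty input.
    return 0.0


def candidate_b(xs: Sequence[float]) -> float:
    # Handles the empty case and preserves existing behavior.
    return sum(xs) / len(xs) if xs else 0.0


def passes_checks(fix: Fix) -> bool:
    """Return True only if the fix satisfies every check without raising."""
    checks = [((), 0.0), ((2.0, 4.0), 3.0), ((5.0,), 5.0)]
    for args, expected in checks:
        try:
            if fix(args) != expected:
                return False
        except Exception:
            return False
    return True


def first_validated(candidates: Iterable[Fix]) -> Optional[Fix]:
    """Return the first candidate that passes all checks, or None."""
    for candidate in candidates:
        if passes_checks(candidate):
            return candidate
    return None


accepted = first_validated([buggy_mean, candidate_a, candidate_b])
print(accepted.__name__ if accepted else "no validated fix")  # candidate_b
```

If no candidate passes, the gate returns `None` and nothing is proposed, which is the behavior that separates a validated fix from a guess.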

A key advantage of LogicStar’s approach is how we understand the codebase. While most code agents use simple search tools like grep to explore a codebase, LogicStar builds a static-analysis–driven knowledge graph of the entire codebase. This persistent representation captures data flows, control flows, invariants, and component relationships that traditional agents must rediscover from scratch on every run. As a result, LogicStar can reason about bugs and validate fixes with far greater efficiency, depth, and consistency.
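The difference between text search and a persistent structural representation can be illustrated with a toy example. The sketch below uses Python's standard `ast` module to build a small call graph once and then answer a question that grep cannot answer directly: which functions are transitively affected if `read` changes? This is an assumption-laden illustration only; the sample source, `build_call_graph`, and `callers_of` are hypothetical names, and a real knowledge graph of the kind described above would also model data flow, control flow, and invariants.

```python
import ast
from collections import defaultdict
from typing import Dict, Set

# Hypothetical sample code to analyze.
SOURCE = '''
def load(path):
    return parse(read(path))

def read(path):
    return open(path).read()

def parse(text):
    return text.split()

def main():
    return load("data.txt")
'''


def build_call_graph(source: str) -> Dict[str, Set[str]]:
    """Map each function to the locally defined functions it calls by name."""
    tree = ast.parse(source)
    defined = {n.name for n in ast.walk(tree) if isinstance(n, ast.FunctionDef)}
    graph: Dict[str, Set[str]] = defaultdict(set)
    for func in ast.walk(tree):
        if not isinstance(func, ast.FunctionDef):
            continue
        for node in ast.walk(func):
            # Only direct calls such as parse(...); method calls are ignored.
            if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
                if node.func.id in defined:
                    graph[func.name].add(node.func.id)
    return dict(graph)


def callers_of(graph: Dict[str, Set[str]], target: str) -> Set[str]:
    """Return every function that reaches `target` directly or transitively."""
    result: Set[str] = set()
    frontier = [target]
    while frontier:
        current = frontier.pop()
        for caller, callees in graph.items():
            if current in callees and caller not in result:
                result.add(caller)
                frontier.append(caller)
    return result


graph = build_call_graph(SOURCE)
print(sorted(callers_of(graph, "read")))  # ['load', 'main']
```

A grep for `read(` would match the call site in `load` and the unrelated `.read()` method call, but it would not reveal that `main` depends on `read` through `load`. Because the graph is built once and kept, later queries reuse it instead of rediscovering the structure.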

By leveraging this understanding to give software the ability to repair itself, we turn AI-driven feature development from a short-lived boost into long-term, compounding productivity.

Author: Mark Niklas Müller
