SWE-Bench Verified – Best Fix Generation at 76.8%

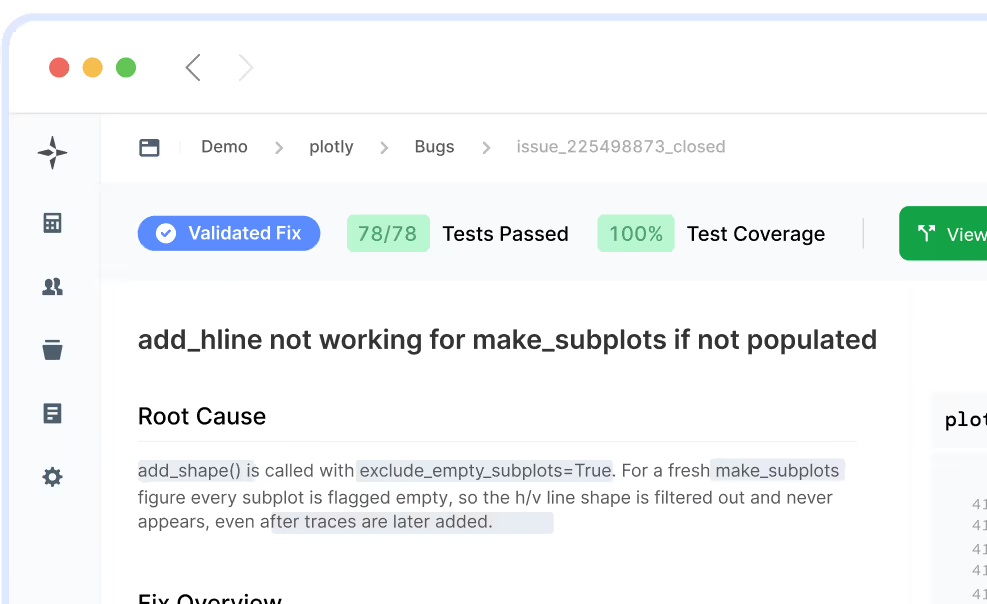

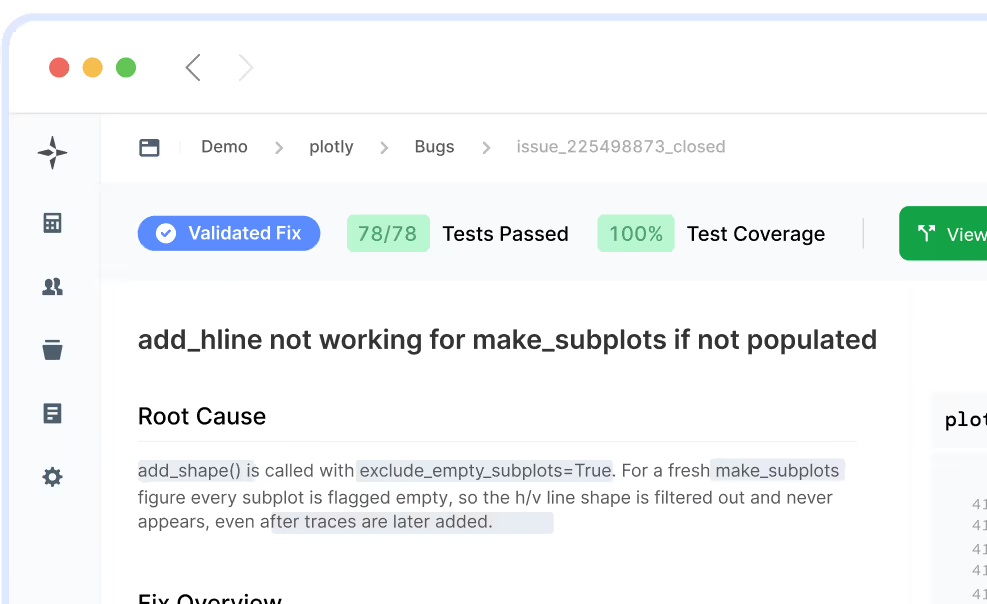

At LogicStar, our mission is to build a platform for self-healing applications. This relies on a strong bug-fixing backbone and review system working hand in hand to produce high-quality code fixes where possible, while abstaining rather than proposing incorrect fixes. We are therefore excited to announce that we not only have the best test generation system (announced last week) but also reached the state-of-the-art in fix generation with 76.8% accuracy on SWE-Bench Verified, the most competitive benchmark for automated bug fixing. Combining these systems, we achieve 80% precision, i.e., if our agent proposes a code fix, it is ready to merge 8 out of 10 times.

We are particularly proud that we achieved these results with our cost-effective production system rather than an agent carefully tuned for SWE-Bench and too expensive to ever run on customer problems. To achieve this, our L* Agent v1 leverages only the cost-effective OpenAI GPT-5 and GPT-5-mini, breaks down the bug fixing problem into clear sub-problems, and then orchestrates multiple sub-agents to investigate, reproduce, and fix the issue, before carefully reviewing and testing the generated code fix. All of this is enabled by our agent’s unique codebase understanding, powered by proprietary static analysis.

So, how does our L* Agent work and why is it so cost-effective? The main insight is to combine a strong model (GPT-5), generating baseline patches and tests, with diverse cheaper agents based on GPT-5-mini, to increase diversity before picking the best patch using our state-of-the-art tests. All of this is enabled by our static-analysis-powered codebase understanding, which boosts the performance of both the weak and strong models.

We prioritize correctness and validation over speed, processing all issues asynchronously, as soon as they appear in your bug backlog or observability. This approach ensures you don’t have to waste time manually triaging and reviewing issues but simply receive high-quality patches from LogicStar for the issues we can solve confidently. We are now turning this technology into a loveable product, and invite you to sign up as a design partner if you’d like to help us build a system that will reliably maintain your code. While SWE-Bench is an important benchmark, it’s only part of the story — we are developing our agents for real-world use and not only benchmarks, so be sure to follow us for more updates.

Join the beta and let LogicStar AI clear your backlog while your team stays focused on what matters.

No workflow changes and no risky AI guesses. Only validated fixes you can trust.