How We Made SWE-Bench 50x Smaller

Evaluating coding agents shouldn’t feel like watching paint dry. Yet with SWE-Bench Verified, it often does—hundreds of Docker images totaling 240 GiB, throttled by rate limits*, turn the first setup on a new machine into a 30-hour ordeal. Want to test across a broader, less overfitted, and more representative set of repositories, by also using our SWA-Bench and SWEE-Bench or your own environments? Good luck; things only get slower.

So we decided to fix that. By restructuring layers, trimming unnecessary files, and compressing the results, we shrank SWE-Bench Verified from 240 GiB to just 5 GiB. Now it downloads in under a minute, making large-scale evaluation and trace generation on cloud machines fast and painless.

*100 images per 6h as an unauthenticated user, 200 as an authenticated user without Docker Hub Pro

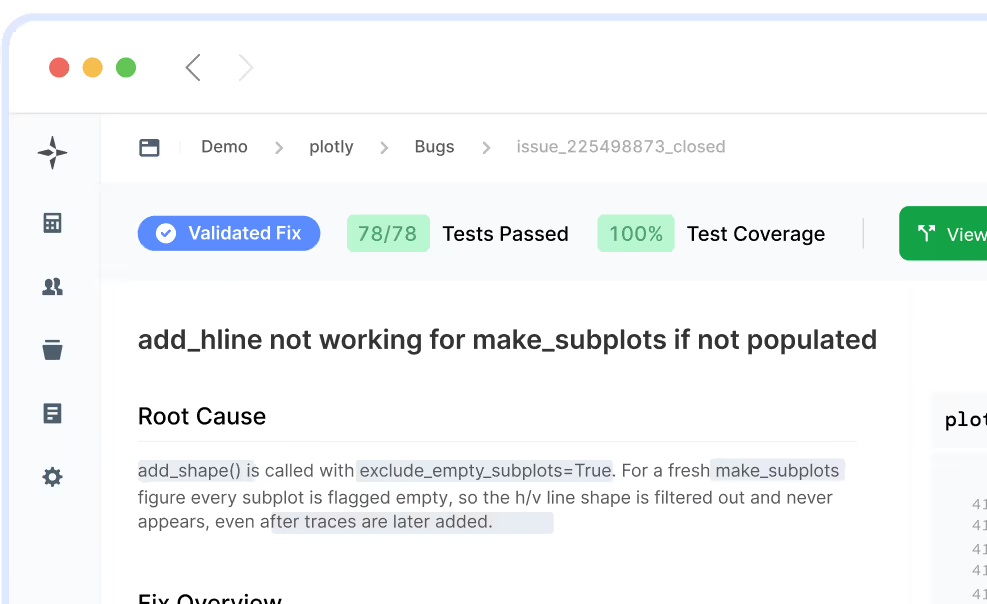

Evaluating SWE-Bench Verified requires 500 containerized environments, one for each issue across twelve repositories. Your options are either to build all of them from scratch (and pray all dependencies were pinned) or to pull the prebuilt images from Docker Hub. Neither choice is great. Building takes hours and can introduce inconsistencies. Pulling requires downloading more than 100 GiB of compressed layers and expanding them into 240 GiB of local storage. Even with a Docker Hub Pro subscription and a fast connection, this process takes anywhere from half an hour to several hours. Without a Pro account, rate limits make it even worse—you can spend 30 hours just waiting for pulls to finish.

The situation becomes truly painful if you want to evaluate more instances at scale on ephemeral cloud machines. Copying 100s of GiB around the world hundreds of times adds up quickly. So we set out to make the environment images light enough to be dropped onto a fresh machine in minutes.

At the core of every Docker image lies a stack layers representing filesystem changes. When a container runs, Docker (via OverlayFS) looks for the topmost layer containing a requested file and reads it from there. The container itself adds a thin writable layer on top: when you modify a file, Docker copies it into this writable layer so changes never affect the underlying image layers.

This design is clever because it makes image storage and distribution efficient. If two images share a base like ubuntu:latest, they can both use the same base layer and only add their own differences on top. However, every file that is modified is fully duplicated.

For SWE-Bench, every image starts with ubuntu:22.04. Then comes one of 63 distinct “environment” layers that set up dependencies, and finally one of 500 "instance" layers, including the repository checkout at the right commit.

The problem is that while the environment layers share many dependencies and repositories change very little between commits, the resulting layers are still different. As a result, full copies are created every time. While every checkout is only a few hundred megabytes, that quickly adds up when multiplied by 500 instances.

In short, the way SWE-Bench (Verified) images are constructed leads to hundreds of near-duplicate layers adding up to 240 GiB.

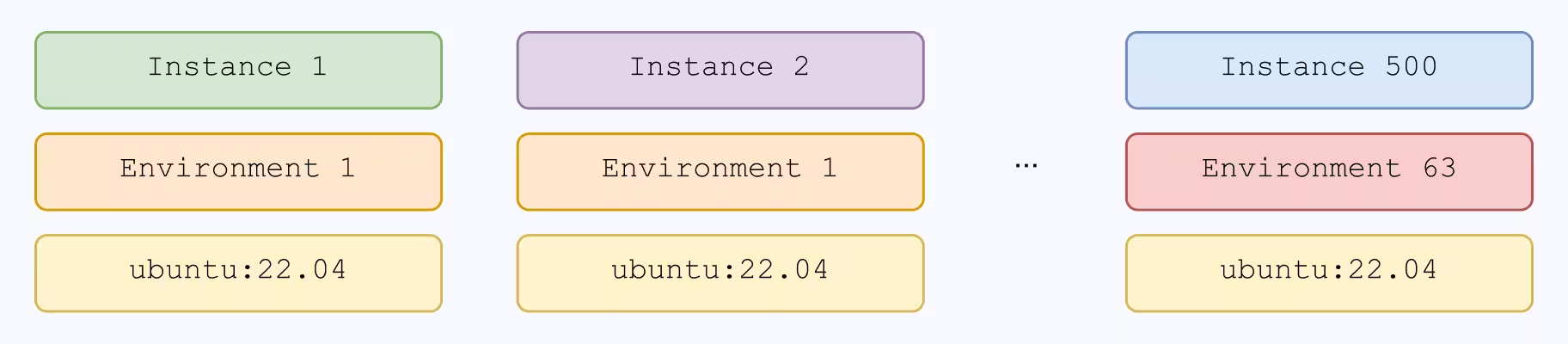

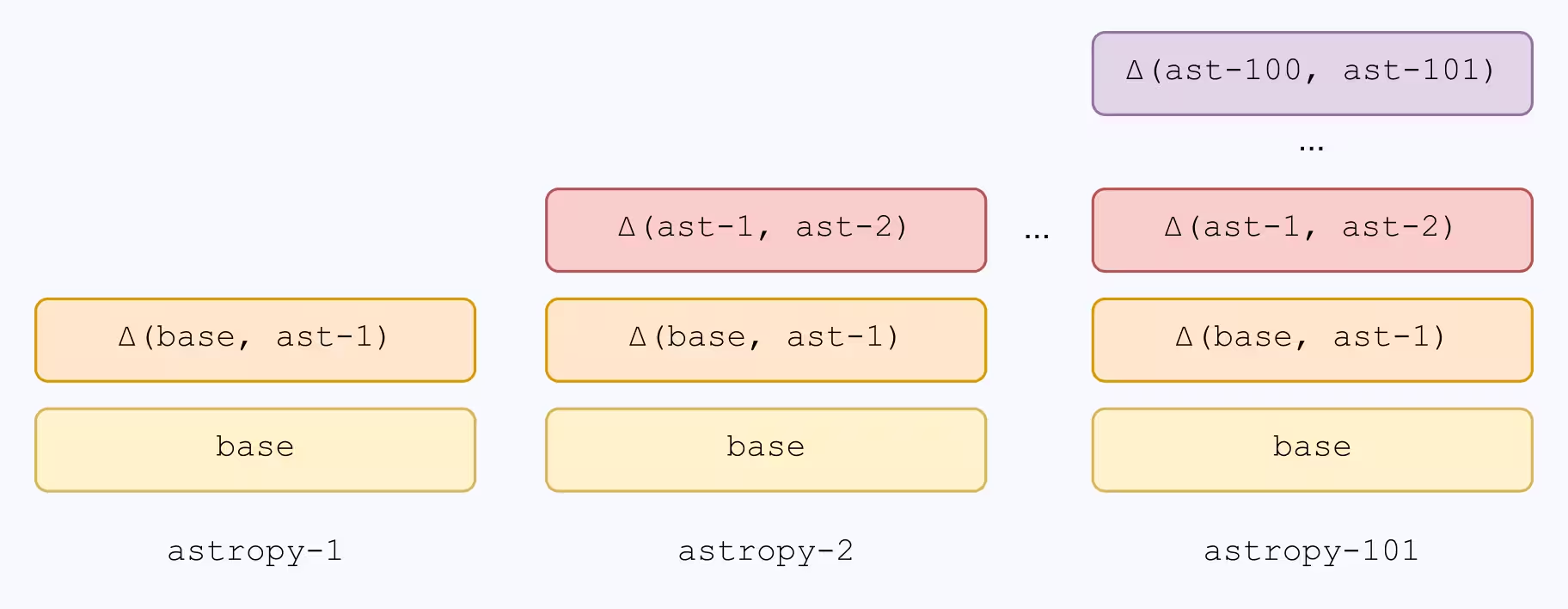

To resolve this, we introduce a technique we call delta layering. Instead of creating a single layer for every checkout containing a full copy of the repository, we post-process the images so that each instance layer only adds the difference - the delta - to the commit before.

The intuition is simple: two snapshots of the same repository taken only a few weeks apart are nearly identical. Yet in the default layering scheme, both snapshots get packaged as full copies; delta layering removes that duplication.

We build chronological chains—one per repository—where each instance builds directly on top of the previous one. The resulting layers become small changes between commits (including potential dependency changes), instead of big, redundant snapshots. Only Django had so many instances that we had to split it into two chains due to Docker’s hard limit of 125 layers per image.

All of these chains share a common base layer that holds the truly universal pieces - Ubuntu 22.04, Conda, and other system-level dependencies.

Could we get the same result by just cloning the chronologically last state of the repo and then checking out the right commit? Unfortunately, no. This would leave future commits in the git history, which can and did get exploited by agents to cheat.

Delta layering solves much of the duplication problem, but there’s a hidden complication: git history. Each SWE-Bench image includes the full git history of the repository up to the point when the issue was created. In principle, this shouldn’t be a huge deal. Git stores its data as a key–value database of objects: commits, trees, and blobs. Adding a new commit just creates a few new objects-the changed files, changed directories, and the commit object itself. If everything were stored as loose zlib-compressed files in .git/objects, delta layering could simply capture the handful of new objects.

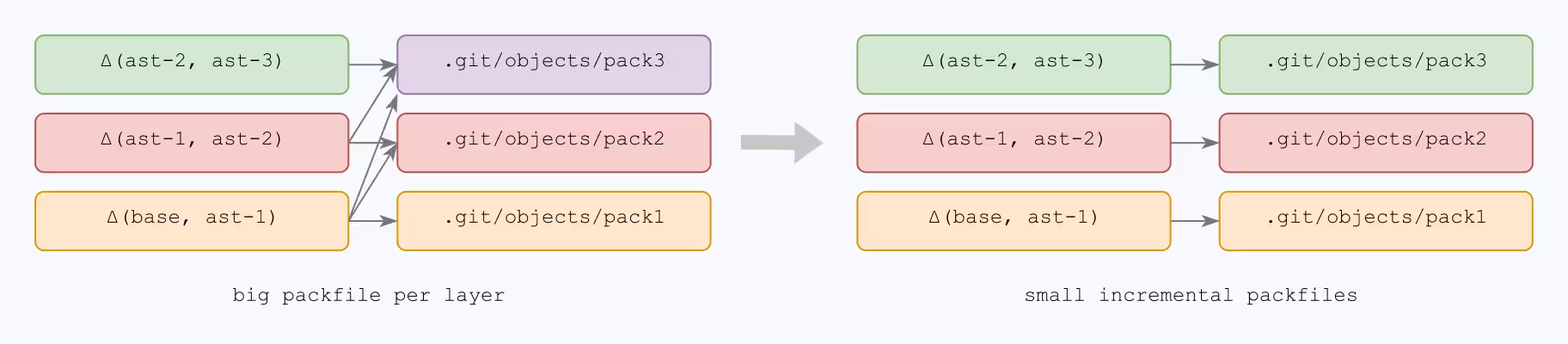

But in practice, git uses packfiles. A packfile bundles thousands of objects into a single large file and applies compression across them. This is great for efficiency, but the problem is that every time a new packfile is generated, that’s an entirely new multi-hundred-megabyte file from Docker's perspective. As a result, all the benefits of delta layering vanish.

To resolve this problem, we restructured the packfiles, creating one per instance, containing all additional git objects. We do lose some of git’s internal compression, but the trade-off is worth it: small, incremental layers instead of massive redundant packfiles.

Many of the images contained leftovers from the build process that were never needed at runtime—installers, caches, etc. For example, the Miniconda installer alone added 136 MB to every image. Pip and Conda caches consumed even more. Removing these shaves off gigabytes at essentially no cost.

In addition to making each layer as small as possible, we also apply cross-layer compression. While Docker’s layer model copies the entire file when a single line changes, compression algorithms are very good at spotting such repeated data.

We choose zstd because it’s fast, highly parallel, and supports very large compression windows. To give the compressor the best shot, we sorted the layers by their chronological chain order. That way, nearly identical layers sit next to each other in the input stream. As a result the entire benchmark, 240GiB of raw images, now fits into a single 5 GiB archive.

Using 100 cores, the compression process below takes around ten minutes. Decompression, however, is extremely fast—about forty seconds on a single core.

All told, our optimizations bring SWE-Bench Verified down from 240 GiB of raw layers to just 31 GiB uncompressed—and with the right compression, a single archive of only 5 GiB. That archive is small enough to download and unpack in about five minutes on any modern machine. And the best thing, the core of our optimization – delta layer – is not SWE-Bench specific and can be easily applied to any other series of execution environments. Because Docker and Podman can’t natively load compressed bundles, we’ve provided helper scripts on GitHub. The final archive itself is hosted on Hugging Face, supporting fast downloads.

If all you care about is the quickest way to set up SWE-Bench Verified, here it is:

Execution environments are not only essential for evaluating code agents but also for training code models. Regardless of whether you do RL or SFT, generating high-quality training data requires diverse agent traces, which in turn require a large number of execution environments. Execution environments which we can now efficiently store and distribute to a large number of ephemeral machines to generate a large number of traces…

Stay tuned to learn more about what comes next.

Authors: Christian Mürtz & Mark Niklas Müller

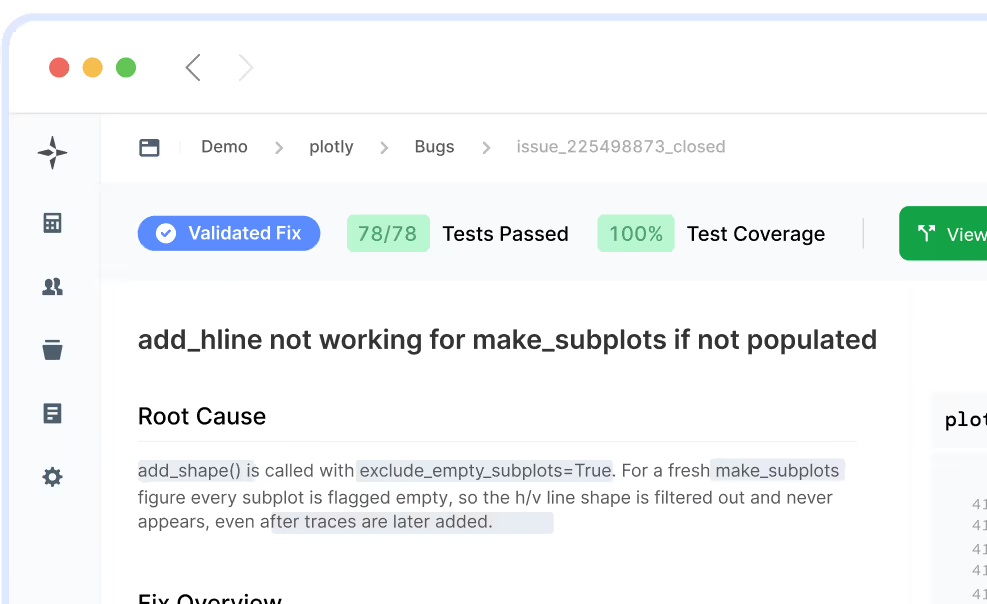

Join the beta and let LogicStar AI clear your backlog while your team stays focused on what matters.

No workflow changes and no risky AI guesses. Only validated fixes you can trust.